This is an archival copy of the Visualization Group's web page from 1998 to 2017. For current information, please visit our group's new web page.


Deep Sky Project Update

Introduction

Deep Sky is a collaboration between the

Computational Cosmology Center

at the Lawrence Berkeley National Laboratory, the

National Energy Research Scientific Computing Center (NERSC),

and many universities and other research institutions.

The overarching goal of Deep Sky is to develop an astronomical image database

of unprecedented depth, temporal breadth, and sky coverage, starting with

the images collected over the seven-year span of the

Palomar-Quest and

Near-Earth Asteroid Tracking (NEAT)

transient surveys.

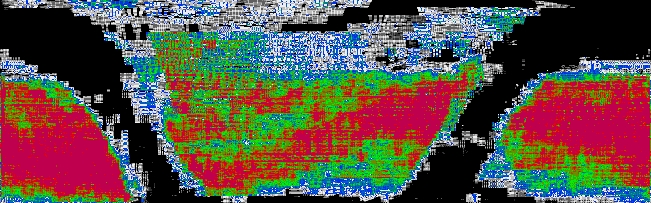

This set of images covers approximately 20,000 square degrees on the sky

with 10 to 100 pointings at any given set of sky coordinates (see the image

below).

We currently have approximately nine million images stored on the NERSC

Global Filesystem.


Sky coverage of images from the Palomar-Quest and NEAT transient surveys. The gray-white coloring represents about 30 pointings, blue on the order of 70 pointings, green about 100, and red about 200.

A self-guided demo written for SC07 describes how the images are processed and co-added to produce 'deep reference' images. To date, over four million images have been processed; we expect to complete the processing of the original set of nine million images by the end of 2008 and then to begin producing deep reference images for every square degree of the sky for which images are available.

Update

During the past year, in addition to improving the algorithms used to process and co-add the images, we began using a database to store information about the processed and deep reference images. The database will facilitate the production of deep reference images (once all of the raw images have been processed), and it will also allow us to assess image quality by analyzing processing parameters and image characteristics. We use Postgres as the database management system (DBMS). Postgres provides transaction management and foreign key constraints, and in the testing we did before choosing a DBMS, we found that Postgres performed better than MySQL with InnoDB tables.

Postgres supports geometric types and operators that simplify finding images that contain a given point (i.e., specified ra and dec values) or that overlap a given region (i.e., a square region centered on a point specified by ra and dec values). The images whose records are stored in the database are nearly, but not exactly, rectangular; therefore, we store each image footprint using the Postgres polygon geometric type. To find images that contain a given point, we use the contained-in operator ("<@"), and to find images that overlap a given region, we use the overlaps operator ("&&"). Both operators use the minimum bounding box of the image footprint to determine intersections.
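
The bounding-box behavior described above can be illustrated with a small Python sketch. It is not the database code used by the project; it only mimics, under simplifying assumptions, what a bounding-box test on a footprint amounts to. The function names, the sample footprint, and the flat treatment of ra and dec (no wraparound at 0/360 degrees, no cos(dec) correction) are all assumptions made for illustration.

```python
from typing import List, Tuple

Point = Tuple[float, float]              # (ra, dec) in degrees
Box = Tuple[float, float, float, float]  # (ra_min, dec_min, ra_max, dec_max)


def bounding_box(footprint: List[Point]) -> Box:
    """Minimum axis-aligned box enclosing a polygon footprint."""
    ras = [p[0] for p in footprint]
    decs = [p[1] for p in footprint]
    return (min(ras), min(decs), max(ras), max(decs))


def box_contains_point(box: Box, point: Point) -> bool:
    """Bounding-box analogue of a 'point contained in footprint' test."""
    ra_min, dec_min, ra_max, dec_max = box
    ra, dec = point
    return ra_min <= ra <= ra_max and dec_min <= dec <= dec_max


def square_region(ra: float, dec: float, size: float) -> Box:
    """Square search region of side `size` centered on (ra, dec)."""
    half = size / 2.0
    return (ra - half, dec - half, ra + half, dec + half)


def boxes_overlap(a: Box, b: Box) -> bool:
    """Bounding-box analogue of a 'footprint overlaps region' test."""
    return a[0] <= b[2] and b[0] <= a[2] and a[1] <= b[3] and b[1] <= a[3]


# Hypothetical footprint: close to, but not exactly, rectangular.
footprint = [(10.0, 20.0), (10.5, 20.02), (10.52, 20.5), (10.01, 20.48)]
box = bounding_box(footprint)

inside = box_contains_point(box, (10.25, 20.25))
outside = box_contains_point(box, (11.0, 20.25))
near = boxes_overlap(box, square_region(10.6, 20.4, 0.4))
far = boxes_overlap(box, square_region(12.0, 20.0, 0.4))
```

Because the test uses the bounding box rather than the exact polygon, a point just outside a slightly skewed footprint but inside its box would still be reported as a match.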

To speed up these queries, we define an image index based on the ra and dec values of the center of the image. This index value is computed when the record for an image is inserted into the database, and a table index has been created on the image index column. When searching for images that include a specified point, we first determine a 'cover set' by identifying the image indexes of images that are likely to contain the point, i.e., images with image indexes close in value. Only images in the cover set are then tested with the appropriate geometric operator to see whether they meet the criterion (contains or overlaps). This reduces the number of images to be tested from millions to hundreds.
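
The text does not say how the image index is computed, so the following Python sketch shows one possible scheme purely as an assumption: the sky is divided into cells of fixed angular size, the image index is the cell number of the image center, and the cover set is drawn from the cell containing the query point plus its neighbors. The cell size, the function names, and the sample images are hypothetical, and the sketch assumes that image footprints are smaller than one cell.

```python
import math
from collections import defaultdict
from typing import Dict, List, Set, Tuple

CELL_DEG = 1.0                 # hypothetical cell size in degrees
N_RA = int(360 / CELL_DEG)     # number of cells along ra
N_DEC = int(180 / CELL_DEG)    # number of cells along dec


def _cells(ra: float, dec: float) -> Tuple[int, int]:
    """Return the (ra_cell, dec_cell) grid position of a sky coordinate."""
    ra_cell = int(math.floor((ra % 360.0) / CELL_DEG)) % N_RA
    dec_cell = min(int(math.floor((dec + 90.0) / CELL_DEG)), N_DEC - 1)
    return ra_cell, dec_cell


def image_index(ra: float, dec: float) -> int:
    """Index value stored with an image, derived from its center."""
    ra_cell, dec_cell = _cells(ra, dec)
    return dec_cell * N_RA + ra_cell


def cover_indexes(ra: float, dec: float) -> Set[int]:
    """Indexes of the cell holding (ra, dec) and its neighboring cells."""
    ra_cell, dec_cell = _cells(ra, dec)
    result = set()
    for d in (-1, 0, 1):
        dc = dec_cell + d
        if not 0 <= dc < N_DEC:
            continue               # no cells beyond the poles
        for r in (-1, 0, 1):
            rc = (ra_cell + r) % N_RA   # ra wraps around at 360 degrees
            result.add(dc * N_RA + rc)
    return result


def build_table_index(images: Dict[str, Tuple[float, float]]) -> Dict[int, List[str]]:
    """Map each image index to the ids of images whose center has that index."""
    table = defaultdict(list)
    for image_id, (ra, dec) in images.items():
        table[image_index(ra, dec)].append(image_id)
    return table


def cover_set(table: Dict[int, List[str]], ra: float, dec: float) -> List[str]:
    """Candidate images to pass on to the exact geometric test."""
    ids: List[str] = []
    for idx in sorted(cover_indexes(ra, dec)):
        ids.extend(table.get(idx, []))
    return ids


# Hypothetical image centers, keyed by image id.
images = {"a": (10.2, 20.3), "b": (11.1, 20.9), "c": (200.0, -45.0), "d": (359.9, 20.5)}
table = build_table_index(images)
candidates = cover_set(table, 10.5, 20.5)
```

In a database, the lookup in `cover_set` would correspond to an indexed search on the image index column, followed by the geometric operator applied only to the returned rows.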

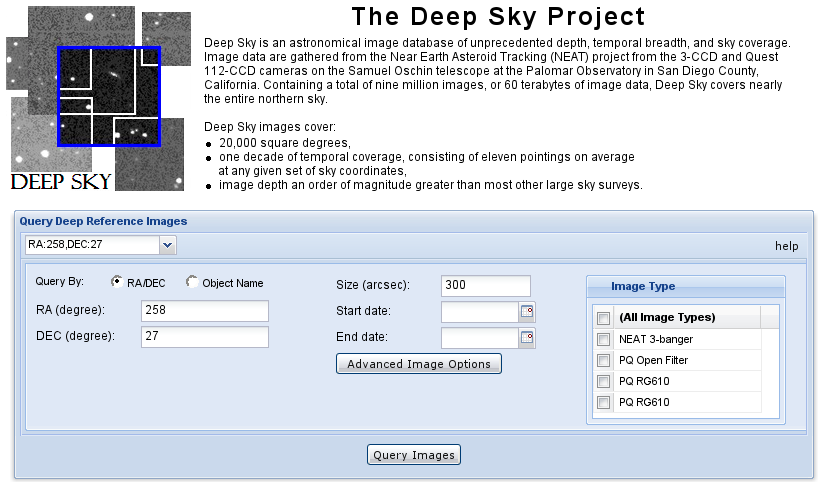

In addition, we have developed a prototype Web interface (see the screenshot

below) to provide

public access to the deep reference images and component images that

are co-added to generate the deep reference images.

The interface was developed using

Ext JS, a cross-browser JavaScript library.

To retrieve images, the user enters ra and dec values and the

size of the region (centered on the input ra and dec values).

Optionally, the user can reset image processing parameters by

clicking on "Advanced Image Options"; these parameters are passed

to the stiff image processing program. After the user clicks the

"Query Images" button, the database is queried to find all images that

contain the input ra and dec values.

The swarp program is used to resample and co-add the retrieved FITS

image files, and the stiff program is used to convert the FITS files

to TIFF image format. The deep reference image and the component images

that were co-added to produce the deep reference image are then displayed

(not shown).
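
The co-add and conversion steps can be sketched as two command lines assembled in Python. This is a minimal illustration, not the interface's actual code: the file names are hypothetical, and the sketch assumes that swarp and stiff accept configuration overrides on the command line as `-IMAGEOUT_NAME` and `-OUTFILE_NAME` respectively. The commands are only built here, not executed.

```python
from typing import List


def swarp_command(fits_files: List[str], coadd_path: str) -> List[str]:
    """Command that resamples and co-adds the input FITS files."""
    # Assumption: -IMAGEOUT_NAME sets the name of the co-added output file.
    return ["swarp", *fits_files, "-IMAGEOUT_NAME", coadd_path]


def stiff_command(fits_path: str, tiff_path: str) -> List[str]:
    """Command that converts a FITS file to a TIFF image."""
    # Assumption: -OUTFILE_NAME sets the name of the TIFF output file.
    return ["stiff", fits_path, "-OUTFILE_NAME", tiff_path]


def build_pipeline(
    fits_files: List[str],
    coadd_path: str = "coadd.fits",   # hypothetical output name
    tiff_path: str = "coadd.tif",     # hypothetical output name
) -> List[List[str]]:
    """Return the commands to run, in order, for one query result."""
    if not fits_files:
        raise ValueError("the query returned no images to co-add")
    return [
        swarp_command(fits_files, coadd_path),
        stiff_command(coadd_path, tiff_path),
    ]


# Hypothetical file names standing in for the images returned by the query.
commands = build_pipeline(["img_001.fits", "img_002.fits"])
```

Each command list could then be passed to `subprocess.run(cmd, check=True)` on a system where both programs are installed; any "Advanced Image Options" chosen by the user would be appended to the stiff command as further overrides.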


Screenshot of the Deep Sky Project prototype interface for searching the Deep Sky image database for deep reference and processed images.