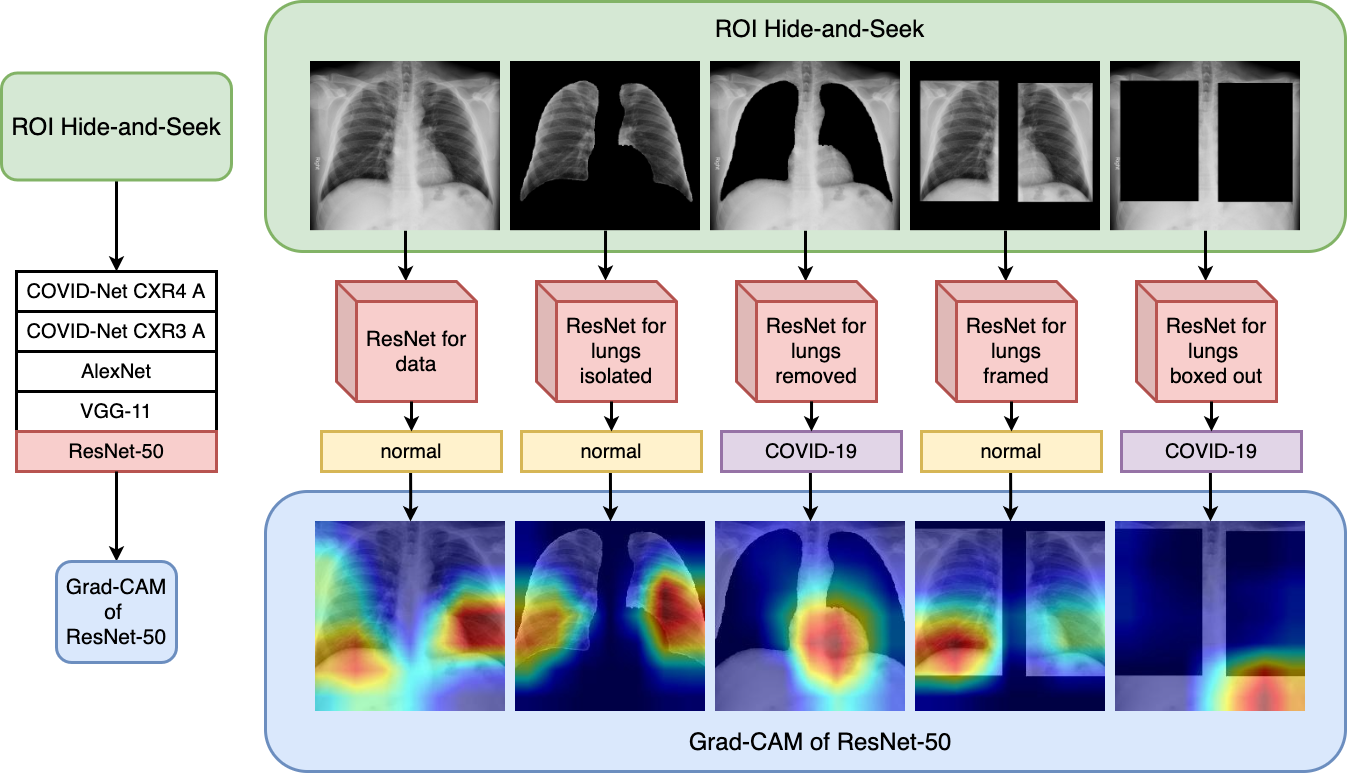

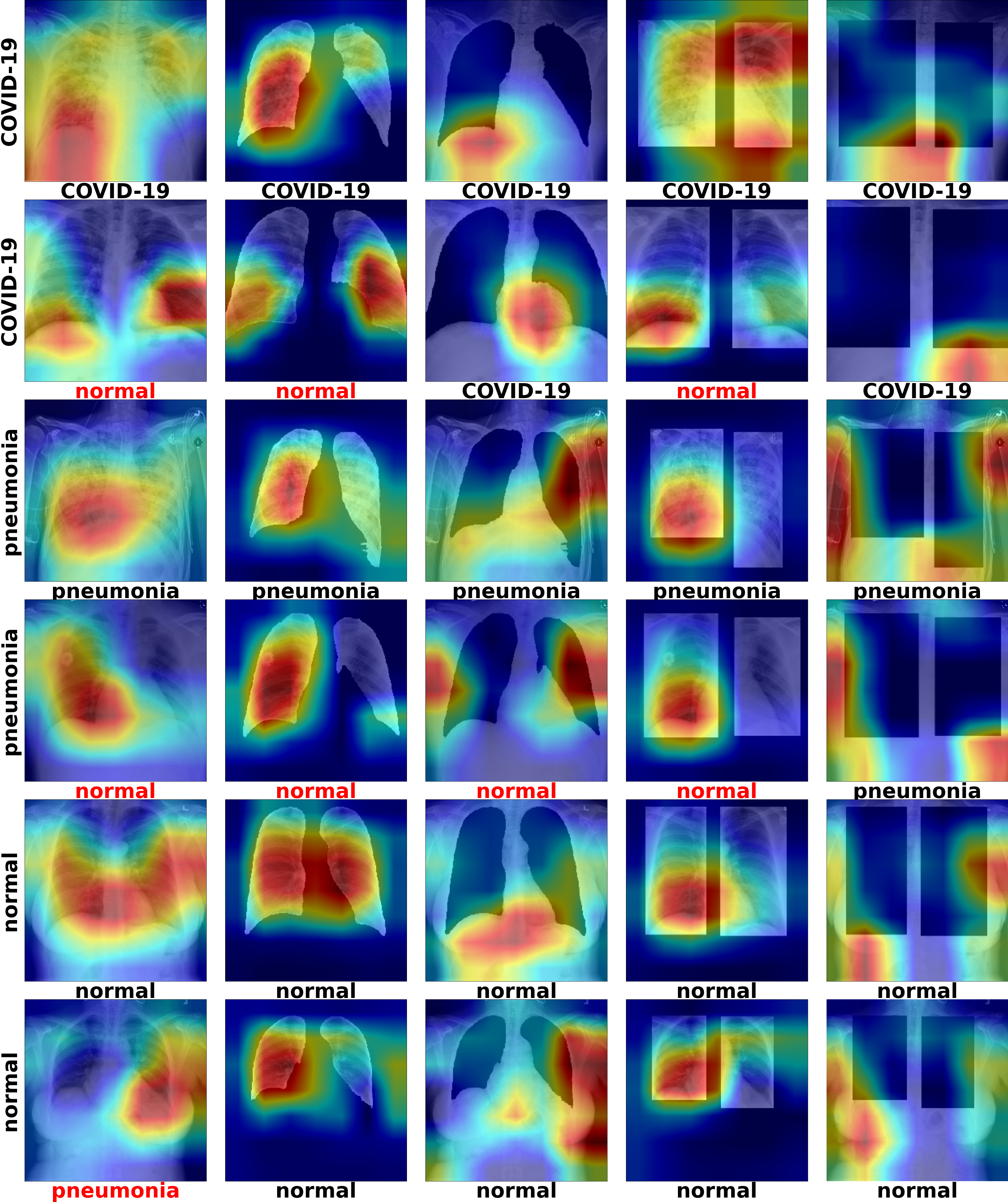

(Left) ROI Hide-and-Seek protocol to inspect deep learning architectures. (Right) Grad-CAM maps to improve interpretability of deep learning results.

A new methodology [1] for validating and verifying the results of chest radiograph classification opens new avenues for understanding the functions that deep neural networks learn. The ROI Hide-and-Seek method increases the transparency of deep learning models by measuring classification accuracy after key areas of medical images are hidden.

Neural networks often operate as black boxes, but understanding the logic behind their decisions is critical to verifying that they have learned the intended objective. The ROI Hide-and-Seek protocol is a method for verifying an arbitrary image classification task. It hides the region of interest (ROI), in this case the lungs, and then classifies the image into one of three classes: pneumonia, normal, or COVID-19. Surprisingly, performance remained high even though important parts of the image had been removed.
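The hiding step can be illustrated with a minimal sketch. It assumes the lung segmentation is already available as a binary mask with the same height and width as the image; the function name `hide_roi`, the fill value of zero, and the toy 4x4 array are illustrative assumptions, not details taken from [1].

```python
import numpy as np


def hide_roi(image: np.ndarray, roi_mask: np.ndarray, fill_value: float = 0.0) -> np.ndarray:
    """Return a copy of `image` with every pixel inside `roi_mask` set to `fill_value`.

    `roi_mask` is a binary mask (True or nonzero marks the region to hide).
    The fill value is an assumption; other choices (e.g. mean intensity) are possible.
    """
    if image.shape[:2] != roi_mask.shape:
        raise ValueError("mask must match the image height and width")
    hidden = image.copy()                      # leave the original image untouched
    hidden[roi_mask.astype(bool)] = fill_value  # blank out the region of interest
    return hidden


# Toy stand-in for a radiograph: a 4x4 grayscale image with a 2x2 "lung" region.
image = np.arange(16, dtype=np.float32).reshape(4, 4)
lung_mask = np.zeros((4, 4), dtype=bool)
lung_mask[1:3, 1:3] = True

hidden = hide_roi(image, lung_mask)
```

The masked image is then passed to the classifier in place of the original, so any accuracy that survives must come from pixels outside the hidden region.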

Screening chest X-rays for COVID-19 has become a very common application of deep learning networks. Our new protocol offers a systematic procedure for validating and verifying the classification results of neural networks by applying segmentation before classification. It then checks whether network accuracy is affected: for example, is the network less accurate when the lungs are removed? By segmenting the ROI of an image, we can verify whether the networks still learn the classification task successfully. The results showed that, despite lung removal, deep learning models remained highly accurate, raising further questions about what role different data sources play in the classification task.
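The accuracy comparison at the heart of the protocol can be sketched as follows. This is a toy illustration under stated assumptions, not the evaluation code from [1]: each "image" is reduced to a pair of values (a lung feature and a background feature), and `background_classifier` is a hypothetical stand-in for a network that has learned to rely on cues outside the lungs.

```python
from typing import Callable, Sequence, Tuple

Image = Tuple[str, str]  # (lung feature, background feature): a toy abstraction


def accuracy(predict: Callable[[Image], str],
             images: Sequence[Image],
             labels: Sequence[str]) -> float:
    """Fraction of images whose predicted class matches the label."""
    correct = sum(predict(img) == lab for img, lab in zip(images, labels))
    return correct / len(labels)


def accuracy_drop(predict: Callable[[Image], str],
                  originals: Sequence[Image],
                  hidden: Sequence[Image],
                  labels: Sequence[str]) -> Tuple[float, float, float]:
    """Accuracy on the original images, on the ROI-hidden images, and the difference."""
    acc_full = accuracy(predict, originals, labels)
    acc_hidden = accuracy(predict, hidden, labels)
    return acc_full, acc_hidden, acc_full - acc_hidden


def background_classifier(img: Image) -> str:
    """Hypothetical classifier that ignores the lungs and reads only the background."""
    return img[1]


labels = ["pneumonia", "normal", "COVID-19"]
originals = [(lab, lab) for lab in labels]          # both features carry the class
hidden = [("hidden", bg) for _, bg in originals]    # lung feature removed

acc_full, acc_hidden, drop = accuracy_drop(background_classifier, originals, hidden, labels)
```

In this toy setup the drop is zero because the stand-in classifier never looked at the lungs, which is the kind of behavior the protocol is designed to expose. A drop near zero on real data would similarly suggest that the model relies on information outside the region of interest.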