This is an archival copy of the Visualization Group's web page 1998 to 2017. For current information, please vist our group's new web page.

Streamline Integration using MPI-Hybrid Parallelism on a Large Multi-Core Architecture

Problem Statement and Goals

Current CPU technology is evolving to use an increasing number of cores per processor. While current supercomputer CPUs have between two to six cores per processor, trends suggest that future processor architectures will contain between tens to hundreds of cores. This hardware approach gives rise to an important software question: which parallel programming model can effectively utilize such architectures?

The traditional approach is to use the Message Passing Interface (MPI), where each instance of the parallel program is an MPI task. With this approach, it is possible to use all the cores on a processor by having each MPI task mapped to core. As the number of cores per processor increases, this approach may not prove feasible due to a combination of MPI overhead and inability to take advantage of the fact that the multiple cores have access to a single, shared address space memory.

An alternative approach, known as hybrid parallelism, uses MPI parallelism across nodes, or processors, but then uses some form of shared-memory parallelism across cores within a processor. This approach, although more challenging to implement, can result in significant performance and efficiency gains, such as the two-fold speedup for hybrid-parallel volume rendering reported by Howison et al. 2012 [2]. While that study focused on hybrid parallelism for volume rendering, little is known about hybrid parallelism for other types of visualization algorithms, such as integral curve calculation, which lies at the heart of many flow-based methods.

Implementation and Results

Our study aims to create hybrid-parallel versions of two parallel streamline algorithms—parallelize-over-seeds and parallelize-over-blocks—and to better understand the performance of a hybrid-parallel implementation compared to a traditional, MPI-only implementation when run on a modern supercomputer comprised of multi-core processors.

As shown in previous studies, parallel streamline calculations in a distributed-memory setting can lead to poor load balance. When parallelizing-over-blocks, meaning the data set is partitioned and each processor performs streamline integration on the set of seeds that lie within a given data block, load imbalance can occur when the seeds are not distributed evenly across processors. This situation happens frequently when, for example, particles converge to sinks in a flow field. When parallelizing-over-seeds, meaning the seeds are distributed evenly across processors and each processor must load the data needed to perform integration for each of its seeds, performance can suffer as processors perform redundant data loads. This behavior is particularly problematic because I/O is typically the slowest part of the overall system. Our hybrid-parallel algorithms were designed to not only improve the performance of these algorithms in the general case, but also to greatly improve the performance in the problematic cases.

Our hybrid-parallel implementation for both types of parallel streamlines approaches centers around the idea of having, for each MPI task, multiple worker threads that share access to an application-managed shared-memory data cache. For example, if there are B bytes of memory on a processor having N cores, in MPI-only parallelism, each MPI task would have access to only B/N bytes of that memory. In the hybrid-parallel approach, all worker threads share access to a data cache that is on the order of B bytes in size. The benefit here is to reduce redundant data loads and data movement through the memory hierarchy. Also, the hybrid-parallel approach uses task parallelism to overlap I/O with computation for the parallelize-over-seeds approach, and to overlap interprocessor communication with computation for the parallelize-over-blocks approach.

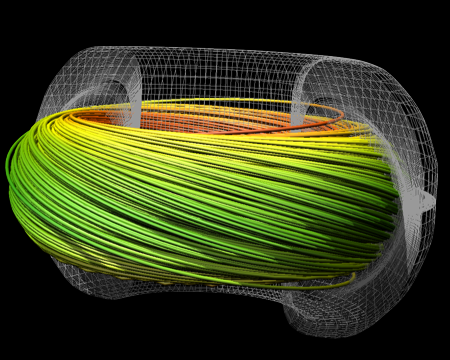

To test performance, our study used three different data sets to provide a range of coverage in the different types of vector fields (see Fig. 1 for visual examples of streamlines for each data set). The different types of vector fields span three different science disciplines, including astrophysics, fusion, and thermal hydraulics research. Our tests for these datasets were designed to cover a wide range of potential problem characteristics. Overall, there were four tests addressing all combinations of seed set size (small or large) and distribution (sparse or dense). We conducted our experiments with the \textsc{VisIt} visualization tool, and hence, the performance gains we observe at in this work will directly apply to real-world, production visualization scenarios.

Our findings indicate that the hybrid-parallel implementation ran faster, between 2x and 10x faster, than the MPI-only version. The hybrid-parallel version performed less data movement in the form of both interprocessor communication and I/O, which contributes to better runtime and better utilization of system resources. Additional details of this study appear in Camp et al. 2011 [1].

Impact

Although hybrid-parallelism increases implementation costs, this study provides additional evidence on how beneficial it can be. The results found that the hybrid-parallel streamline integration implementation performs between 2x and 10x better compared to an MPI-only implementation. The experiment was performed within the VisIt application, which is distributed to a worldwide scientific community. The modifications made to VisIt for the study are being productized and will be deployed in a future VisIt release. These modifications will benefit all science users employing flow-based methods for visualization or analysis in VisIt. In addition to these performance gains, the hybrid parallel approach is also known to have a significantly smaller MPI memory footprint, as evidenced in previous studies (e.g., Howison et al. 2012 [2]), therefore this hybrid-parallel approach enables analysis and visualization of larger data sets than would otherwise be possible using an MPI-only based implementation.

References

1. David Camp, Christoph Garth, Hank Childs, Dave Pugmire, and Kenneth I. Joy. Streamline Integration Using MPI-Hybrid Parallelism on a Large Multicore Architecture. IEEE Transactions on Visualization and Computer Graphics, 17(11):1702–1713, November 2011.

2. Mark Howison, E. Wes Bethel, and Hank Childs. Hybrid Parallelism for Volume Rendering on Large, Multi, and Many-core Systems. IEEE Transactions on Visualization and Computer Graphics, 18(1):17–29, January 2012. LBNL-4370E.