Instrumenting ibrvr with NetLogger

NetLogger is a tool for analyzing performance problems in distributed systems, with capabilities that include the generation and visualization of event logs. Event logs are generated by instrumenting code at critical points in the distributed system. The following summarizes the usage of NetLogger with the NERSC Visualization Group's image-based volume rendering program, ibrvr, as well as lessons learned.

Before using NetLogger

xntpd should be running on the machines running mtserver and mpiclient. Otherwise the event logs on the machine(s) will be out of sync, and the event-log visualization tool, nlv, will not be of much use. For IRIX and Solaris machines mounting callahan, load the "netlogger" module before running mpiclient and mtserver, i.e. "module load netlogger".

Currently ibrvr/config.csh controls where netlogger executables and libraries are taken from. This could also be done through loading the netlogger module, which setenv's NLOGHOME.

Make sure that you have an nlv-keys.cfg file set up before generating any logs. This will have information necessary for nlv to interpret events. There is a link to a nlv tutorial off the main NetLogger page, which includes material on the nlv-keys.cfg file format. The main nlv page contains further useful material.

C language API

$NLHOME/lib/libnetlogger.a contains the NetLogger API, and must be linked into the executable.

ibrvr/common/nlogwrap.c contains wrapper routines around the API to further simplify use. Only four routines need to be called to instrument ibrvr: setup_netlogger, netlog_mesg, netlog_flush, and close_netlogger.

void setup_netlogger(char *progname, char *clientname);

setup_netlogger takes two arguments, the name of the

program instrumented, and the host name where netlogd

is running. netlogd is a daemon that listens for

NetLogger messages and writes them to disk.

void netlog_mesg(int rank, int framenum, char *str);

netlog_mesg generates a NetLogger message that will be logged.

Its arguments are the id of the processing element calling it, the frame number

in a sequence of images being generated, and a string identifying the

event taking place. There must be a corresponding entry in nlv-keys.cfg for any event identifier. Messages are buffered in memory before being sent to

netlogd.

void netlog_flush(void);

netlog_flush forces a flush of the buffer.

void close_netlogger(void);

close_netlogger closes the connection to netlogd.

Instrumenting ibrvr

ibrvr consists of a multi-threaded server, and a client running on a parallel processor. The server uses pthreads, and the client uses MPI for synchronization and communication.

ibrvr is designed to be useful with a relatively small (4-8) number of processors on the client, with the same number of threads on the server. To set up event logging, the master thread on the server sets up a single connection to netlogd, and each thread uses this connection to log messages. Each processing element on the client sets up a separate connection to netlogd.

Events are logged at appropriate points in the distributed system to identify possible bottle-necks. Typically a call to netlog_mesg is made before and after such a critical point. An example would be before and after a write of a slice by the client, and before and after the corresponding read on the server.

The visualization produced by nlv works best if all events are strictly sequential within a given time step. Loops and gaps at best make the visualization difficult to follow and at worst confuse nlv to such an extent that it produces incorrect results. For example, events should be logged outside of a general purpose read routine. If they were logged inside, there would be a number of repetitions of the same sequence of event names.

Generating and viewing event logs

Run netlogd on the same machine as

mtserver:

netlogd -o filename

(This should be generalized to any machine in the future.)

Next run mpiclient and mtserver as usual.

Events are visualized using nlv.

nlv can

be used either after the fact, or in real-time during program execution.

Real-time usage still has some problems, however. You start up

nlv in the following manner:

nlv -k dirname filename

where filename was the name passed to netlogd. The -k option indicates the directory where the nlv-keys.cfg file is located.

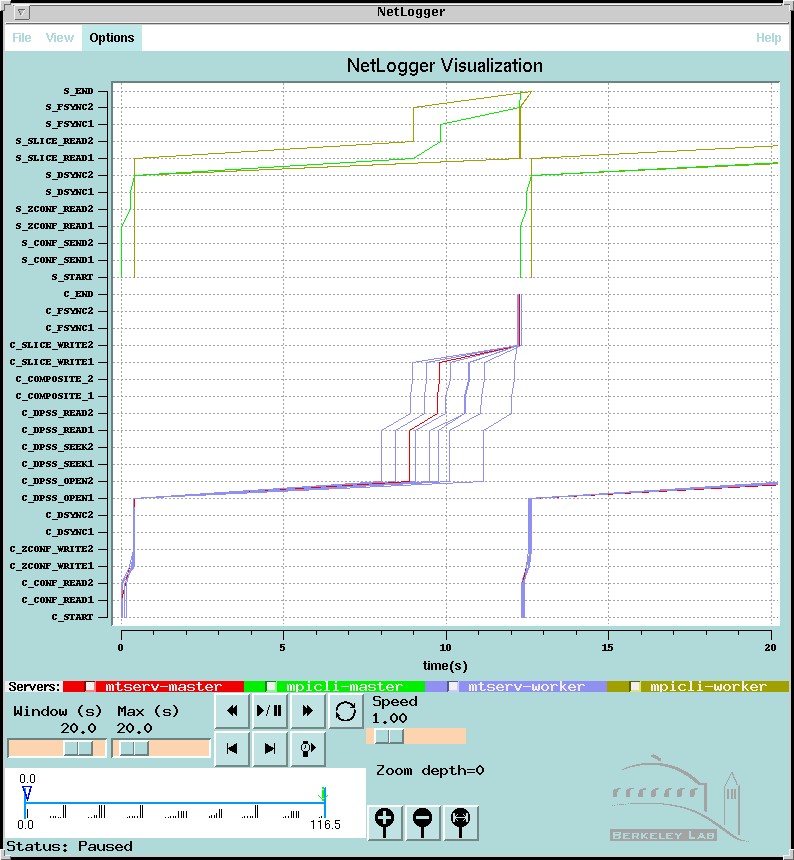

Snapshot of nlv with event log from running ibrvr

The following is a snapshot from running nlv. The red and purple event-lines are for events generated by mpiclient on 8 processors of Bill Saphir's cluster. Corresponding event names start with "C_" in this example. Brown and green event-lines were generated by mtserver. Corresponding event names start with "S_" in this example.

As can be seen from the snapshot, event names should be kept short. "Before" and "after" events are denoted by suffixes of 1 and 2 in most cases. Also note that all event names logged by the client are completely disjoint from the server. Attempting to have a common event set for both introduces complexity in instrumentation and produces a complicated and difficult-to-follow result.

The client events, in order for one time step, are:

C_START: start of time step

C_CONF_READ[1|2]: before and after reading configuration information from server

C_ZCONF_WRITE[1|2]: before and after sending z slice configuration info to server

C_DSYNC[1|2]: before and after sending command to server telling it is OK to begin sorting

C_DPSS_OPEN[1|2]: before and after doing a Distributed Parallel Storage System

(DPSS) open

C_DPSS_SEEK[1|2]: before and after doing a DPSS seek

C_DPSS_READ[1|2]: before and after doing a DPSS read of data

C_COMPOSITE_[1|2]: before and after image compositing

C_SLICE_WRITE[1|2]: before and after slice write to server

C_FSYNC1[1|2]: before and after telling server that all done transmitting slices

C_END: end of time step

The server events, in order, are:

S_START: start of time step

S_CONF_SEND[1|2]: before and after sending config info to client

S_ZCONF_READ[1|2]: before and after reading z slice configuration info from client

S_DSYNC1[1|2]: before and after receiving info that all config data has been sent from client

S_SLICE_READ[1|2]: before and after reading slice data

S_FSYNC[1|2]: before and after client has indicated all slice data has been sent

S_END: end of time step

Results

As can be seen from the log, which is fairly representative, most of the time is taken in the DPSS_Open call. DPSS_Open was not designed to be used repeatedly during a session.

If more time had been available before the SC 99 demo, NetLogger could have been more useful in tuning the application.

ibrvr is designed to be used with a small number of processors, with not much MPI communication on the client. For cases where there are a large number of processors, opening a connection to the NetLogger daemon for every processor would not be feasible.

In that case, one would open one connection to the server from a a designated processor on the parallel machine. I have written wrapper routines around MPI_Send and MPI_Recv which automatically send any pending NetLogger messages to the designated processor for forwarding to netlogd. However, the normal inter-processor communication must involve message passing between the designated processor and the other processors for this to work. The alternative is a gather step, which tends to be slow.