TECA

A Parallel Toolkit for Extreme Climate Analysis

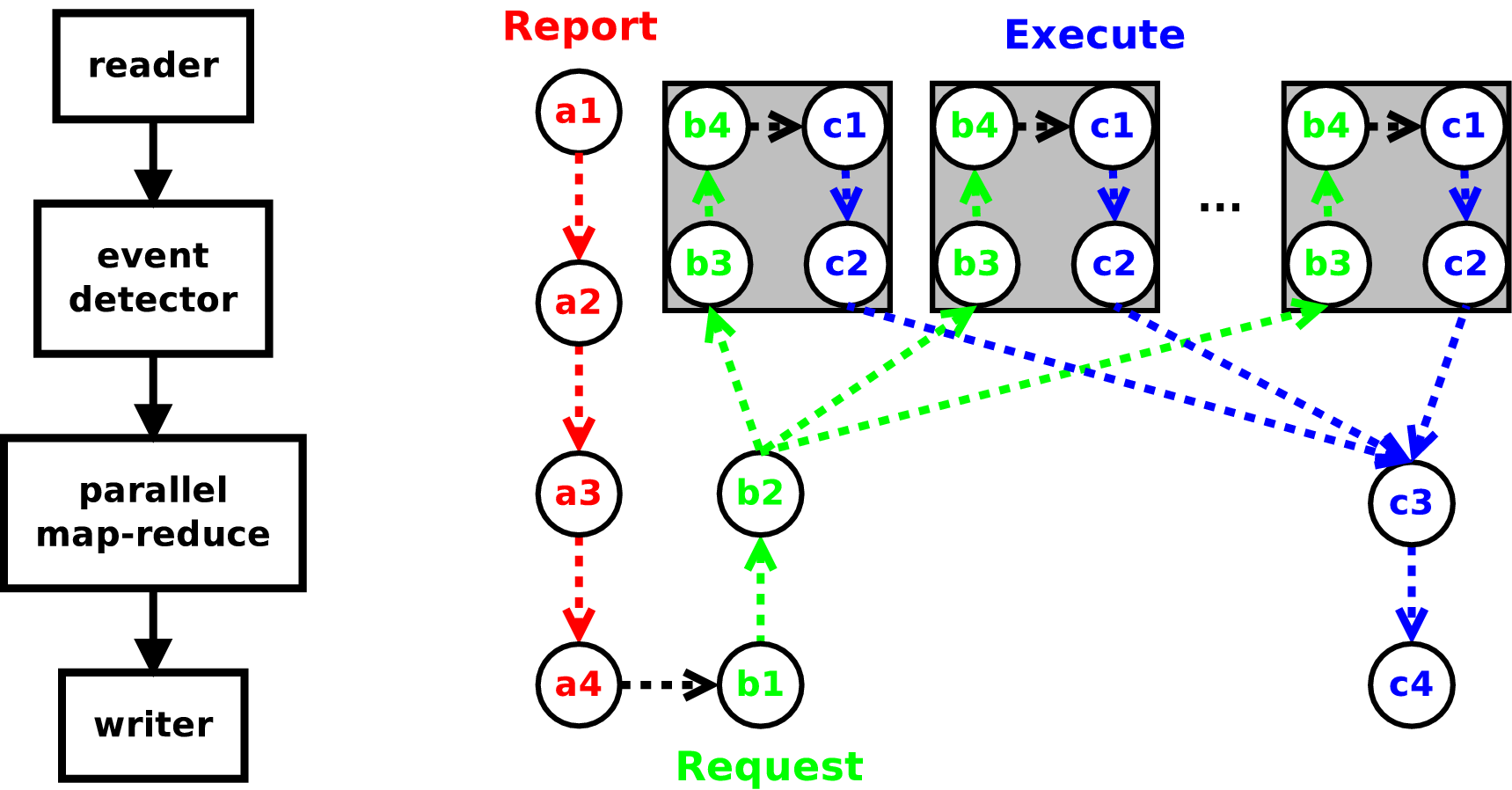

TECA (Toolkit for Extreme Climate Analysis) is a collection of climate analysis algorithms geared toward extreme event detection and tracking, implemented in a scalable parallel framework. The core is written in modern C++ and uses MPI plus threads for parallelism. The framework supports a number of parallel design patterns, including distributed data parallelism and map-reduce. Python bindings make the high-performance C++ code easy to use. TECA has been run on up to 750k cores.
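To show what the map-reduce pattern looks like in this setting, here is a minimal, generic sketch. It is not TECA's API: the functions `count_extremes`, `partition`, and `map_reduce_extremes` are hypothetical, the data is synthetic, and a Python thread pool stands in for the MPI ranks and threads TECA actually uses. Each worker owns a contiguous block of time steps (the data-parallel "map"), computes a partial result, and the partial results are combined with a reduction.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def count_extremes(field, threshold):
    """Hypothetical map step: count grid cells above `threshold` in one time step."""
    return sum(1 for value in field if value > threshold)


def partition(n_items, n_workers, rank):
    """Block decomposition: return the range of item indices owned by `rank`."""
    base, extra = divmod(n_items, n_workers)
    # The first `extra` workers each take one additional item.
    start = rank * base + min(rank, extra)
    stop = start + base + (1 if rank < extra else 0)
    return range(start, stop)


def map_reduce_extremes(time_steps, threshold, n_workers=4):
    """Hypothetical driver: map over each worker's block, then reduce partial counts."""

    def work(rank):
        # Map: each worker processes only the time steps it owns.
        owned = partition(len(time_steps), n_workers, rank)
        return sum(count_extremes(time_steps[i], threshold) for i in owned)

    # A thread pool stands in for MPI ranks in this sketch.
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(work, range(n_workers)))

    # Reduce: combine the per-worker partial results.
    return reduce(lambda a, b: a + b, partials, 0)
```

For example, `map_reduce_extremes([[1.0, 5.0, 7.0], [2.0, 9.0], [0.5]], 4.0)` returns 3. In a distributed-memory version, each rank would read only its own block of time steps from disk, and the final combination would be an MPI reduction instead of a local `reduce`.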

Motivation

Integration of high-resolution global climate models has become feasible on the current generation of DOE supercomputers. One of the principal motivations for these models is their superior representation of storms and extreme weather. Capturing these processes requires fine spatial grids as well as high-frequency temporal output. Together, these requirements dramatically increase the size of the datasets that analysis software must process. Traditional serial analysis tools and methods cannot handle multi-terabyte datasets.

We are developing a number of tools that address these contemporary challenges. The codes are written in C++ and R and run on current desktop workstations as well as the largest DOE HPC platforms (NERSC, ALCF, etc.). The tools follow scientific data management best practices and primarily use MPI for parallel execution on contemporary distributed-memory, multi-core hardware.

Design

TECA has been designed from the ground up to leverage the emerging architecture of many-core systems. We have incorporated a number of event detectors and numerical methods and included Python bindings for ease of use.

More information on the design and features can be found in our AMS '16 talk.

Availability

TECA is due to be publicly released in Q1 '16. The source code is available at the project's Bitbucket repository.

Contacts

Harinarayan Krishnan <hkrishnan@lbl.gov>

Jeffrey Johnson <jnjohnson@lbl.gov>

Burlen Loring <bloring@lbl.gov>